So here you are, starting a new project for your automated testing tasks, and instead of writing good, reusable, and effective test scripts, you just let it all go.

Maybe you realize that writing them consumes a lot of time and energy, and that you would have to learn too much about automated testing and test implementation. Maybe you want to follow the “just ship it” method and improve your code base using the feedback from the very first execution results. Maybe you realize that aiming for success and perfection simply requires too much effort, so it’s not even worth it. Or maybe you just want to torture yourself or annoy others.

We don’t judge you; it doesn’t matter why you want to produce some complicated, messy, and ineffective scripts. I’m sure a lot of my dear readers have already written some at the beginning of their careers, worthy of owning up to on Coding Confessional. But why waste your time doing it on your own? Save yourself the hassle. Here are the best practices to ruin your tests completely—straight from the expert.

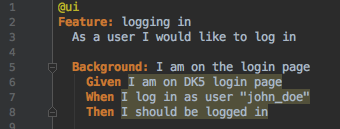

For this exercise, we will use behavior-driven test scripts where you describe the test scenarios in a pseudo-English script language, like Gherkin. We’ll also use the Cucumber framework for integration purposes (as you can read about in the very handy The Cucumber for Java Book).

Don’t Group the Test Cases

Although being able to add test inputs and expected outputs in a table, row by row, is a powerful feature of Gherkin, avoid using scenario outlines. Instead of collecting the example values into a structured, more readable table, just copy and paste the whole scenario with the changed values again and again. This will produce more and longer feature files and less understandable steps. It’s easy to see that the Examples table would ease the maintenance of the test cases and could also demonstrate the logic you implemented, even to non-IT stakeholders in your project.

Don’t Develop in Layers

Some people build one layer for the test steps themselves in a BDD language like Gherkin, another layer for the step definitions, and a third layer for the execution code itself (the latter two written in Java or another high-level programming language), all in the name of reusability. This structure would produce helpfully reusable code fragments, so others could understand and use your code after one or two hours of explanation. We don’t want that. Instead, dump everything in a huge file to make it almost impossible to modify.
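Should you ever slip and separate the layers by accident, the result might look roughly like the sketch below. It is only an illustration in plain Java: `FakeAccountClient` and `AccountSteps` are hypothetical names invented here, and the Cucumber annotations are mentioned in comments rather than imported, so the snippet runs without any dependencies.

```java
import java.util.HashMap;
import java.util.Map;

// Execution layer: the only place that knows how to talk to the system under test.
// FakeAccountClient is a hypothetical in-memory stand-in, not a real library class.
class FakeAccountClient {
    private final Map<String, Integer> balances = new HashMap<>();

    void deposit(String account, int amount) {
        balances.merge(account, amount, Integer::sum);
    }

    int balanceOf(String account) {
        return balances.getOrDefault(account, 0);
    }
}

// Step-definition layer: one small method per Gherkin step, delegating downward.
// In a real Cucumber project these methods would carry @Given/@Then annotations.
class AccountSteps {
    private final FakeAccountClient client = new FakeAccountClient();

    // Would back a step such as: Given "alice" has deposited 50
    void userHasDeposited(String account, int amount) {
        client.deposit(account, amount);
    }

    // Would back a step such as: Then the balance of "alice" should be 50
    void balanceShouldBe(String account, int expected) {
        int actual = client.balanceOf(account);
        if (actual != expected) {
            throw new AssertionError("Expected " + expected + " but was " + actual);
        }
    }
}
```

The feature file would stay on top as the third layer; swapping the fake client for a real one would then touch a single class, which is exactly the kind of convenience we are trying to avoid.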

Don’t Comment Your Code

The behavior-driven code is pretty self-explanatory. There’s no need to comment the code because the describing language was created to imitate real-life texts, right? Furthermore, a good IDE connects the references between the feature and step definition files, and even the logic of the scenarios can be read from the feature files ... more or less. If your colleagues can’t work with your code, let it be their concern.

Don’t Use General Variables

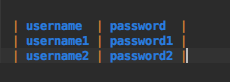

Don’t ever try to use general variables, where the value can be easily replaced. Use a separate variable for everything; be as specific as you can. Or you can even hard-code login or account information. This way, you can ensure nobody will dare to change a thing in your code.

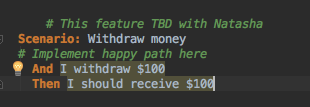
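For the record, this is roughly what you would be giving up. The sketch below keeps test settings in one replaceable place instead of scattering literals through the steps. The variable names `TEST_USER` and `TEST_BASE_URL`, the class `TestConfig`, and the fallback values are all assumptions made for this illustration.

```java
import java.util.Map;

// Central lookup for test settings, with fallbacks for local runs.
// TEST_USER and TEST_BASE_URL are hypothetical names chosen for this sketch.
class TestConfig {
    private final Map<String, String> source;

    TestConfig(Map<String, String> source) {
        this.source = source;
    }

    // Typical use: read the settings from the process environment.
    static TestConfig fromEnvironment() {
        return new TestConfig(System.getenv());
    }

    // Returns the configured value, or the fallback when it is missing or empty.
    String get(String key, String fallback) {
        String value = source.get(key);
        return (value == null || value.isEmpty()) ? fallback : value;
    }

    String user() {
        return get("TEST_USER", "demo-user");
    }

    String baseUrl() {
        return get("TEST_BASE_URL", "http://localhost:8080");
    }
}
```

A step definition would then call `TestConfig.fromEnvironment().user()` rather than naming a specific account, so pointing the suite at another environment would need no code change at all.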

Don’t Verify the Expected Result

For a simple test, it’s enough if the emulator clicks on the objects you want to test. There’s absolutely no need to check the output against the expected result at the end. Determining the expected result requires too much planning effort beforehand. The test will probably pass, anyway. (No, don’t write a line printing out “Passed” at the end, ignoring the real result. That’s too lazy, even for us.)
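In case you are curious what the forbidden verification step looks like, here is a minimal sketch. `FakeLoginPage` is a hypothetical stand-in for whatever drives your UI, and the banner texts are made up for the example; the point is only that the step compares the actual outcome with an expected value and fails loudly on a mismatch.

```java
// Hypothetical stand-in for a page object; a real one would drive a browser or emulator.
class FakeLoginPage {
    private String banner = "";

    void clickLogin(String user) {
        banner = user.isEmpty() ? "Login failed" : "Welcome, " + user;
    }

    String bannerText() {
        return banner;
    }
}

class LoginCheck {
    // Performs the click and then compares what happened with what should have happened.
    static void loginAndVerify(FakeLoginPage page, String user, String expectedBanner) {
        page.clickLogin(user);
        String actual = page.bannerText();
        if (!expectedBanner.equals(actual)) {
            throw new AssertionError(
                "Expected \"" + expectedBanner + "\" but saw \"" + actual + "\"");
        }
    }
}
```

Without the comparison, the click alone would "pass" for the empty user name too, which is of course the behavior we recommend.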

Mix the Output

Print out some outputs to the screen, others to a text file, and the remaining ones to a .csv file. Compare them manually, building a robust and slow step into the test execution. Best of all, write the results “Passed” and “Failed” by hand, after checking every partial result thoroughly. It will consume a lot of time, but hey, automation saved you some anyway. Setting up a retrievable monitoring system is definitely overkill; it would take all the joy out of starting and evaluating the tests manually.
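The boring alternative is a single place that records every result in one format. The sketch below collects outcomes and renders them as CSV; `ResultReport` and its column names are assumptions for illustration, and it presumes scenario names contain no commas or line breaks.

```java
import java.util.ArrayList;
import java.util.List;

// Collects every scenario outcome in one place and one format.
// Assumes scenario names contain no commas or line breaks (no CSV escaping here).
class ResultReport {
    private final List<String> rows = new ArrayList<>();

    void record(String scenario, boolean passed) {
        rows.add(scenario + "," + (passed ? "Passed" : "Failed"));
    }

    // Renders a header line followed by one line per recorded scenario.
    String toCsv() {
        StringBuilder csv = new StringBuilder("scenario,result\n");
        for (String row : rows) {
            csv.append(row).append('\n');
        }
        return csv.toString();
    }
}
```

Writing that string to a file after each run would give you results you can retrieve and compare later, with nobody typing “Passed” by hand.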

Don’t Use Graceful Shutdown

At the end of the test scenarios, don’t implement graceful shutdown for failed test results. And don’t take the trouble of closing the session, logging out your test user, or closing your running processes. The test environment is for testing, right? Everybody should know if you overload the system with the automated tests, even if a large portion of them fail.
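If you wanted to clean up after yourself, which you don’t, one common Java approach is try-with-resources. The sketch below is a minimal illustration: `TestSession` and `ScenarioRunner` are hypothetical names, and the `close()` method only flips a flag where a real one would log the test user out and release the connection.

```java
// Hypothetical session wrapper; close() is where logout and cleanup would happen.
class TestSession implements AutoCloseable {
    private boolean open = true;

    boolean isOpen() {
        return open;
    }

    @Override
    public void close() {
        open = false; // a real implementation would log out and release resources
    }
}

class ScenarioRunner {
    // Runs the scenario and closes the session whether it passes or fails.
    // Returns true on success, false if the scenario threw.
    static boolean run(TestSession session, Runnable scenario) {
        try (TestSession s = session) {
            scenario.run();
            return true;
        } catch (AssertionError | RuntimeException e) {
            return false; // the session has already been closed at this point
        }
    }
}
```

Because try-with-resources closes the session before the `catch` block runs, a failing scenario leaves nothing behind on the test environment.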

Good luck, and remember: There’s no code that can’t be worse! Besides, AI is just going to take over all this code in a few years, anyway.

User Comments

How to ruin your automated checks suite? First step: use BDD.

I challenge everyone who thinks otherwise to send me an email at mz (at) miroslaw-zalewski.eu . I am waiting for anything that proves that using BDD is better (in any quantifiable meaning of the word) than not using BDD. Compare two similar projects, one using BDD and one not. See how fast they can deliver, which one has more bugs, which bugs are more severe, where each of them wastes resources and how much. See if BDD really makes business analysts more involved, if it solves the problem of outdated or ambiguous requirements. Or introduce BDD in the middle of an existing project and see how it fares. Whatever, I'll leave the details to you - but I want to see hard data refuting the hypothesis that BDD is a waste of time.

Thank you, Sir! Although I couldn't find any research about the benefits of BDD, I think it's a test automation approach just like the others. At some point, you just have to choose one.

Agreed. Weigh the pros and cons for the work being done by the team in place and find the solution that best fits their needs. When all things are equal, which they generally are, just pick the solution which the majority of people are willing to adopt.
