If you’ve ever looked at test results, you’ve probably seen something like this:

What does this really tell you?

- There’s a total of 53,274 test cases

- Almost 80% of those tests (42,278) passed

- Over 19% of them failed

- About 1% did not execute

But would you be willing to make a release decision based on these results?

Maybe the test failures are related to some trivial functionality. Maybe they stem from the most critical functionality: the “engine” of your system. Or maybe your most critical functionality was not even tested at all. Trying to track down this information would require tons of manual investigative work and yield delayed, often inaccurate answers.

In the era of agile and DevOps, release decisions need to be made rapidly—preferably, even automatically and instantaneously. Test results that focus solely on the number of test cases leave you with a huge blind spot that becomes absolutely critical—and incredibly dangerous—when you’re moving at the speed of agile and DevOps.

A New Currency for Testing

Test coverage wouldn’t be such a bad metric if all application functions and all tests were equally important. However, they’re not. Focusing on the number of tests without considering the importance of the functionality they’re testing is like focusing on the number of stocks you own without any insight into their valuations.

Based on the test results shown above, you can’t tell whether the release will trigger a software failure that puts your organization in the headlines for all the wrong reasons. If you want fast, accurate assessments of the risks associated with promoting the latest release candidate to production, you need a new currency in testing: Risk coverage needs to replace test coverage.

Test coverage tells you what percentage of the total application functions are covered by test cases. Each application has a certain number of functions; let’s call that number n.

However, you probably won’t have time to test all functions. You can test only m of the available n functions.

You would calculate your test coverage as follows:

TC = m / n × 100%

For instance, if you have 200 functions but tested only 120 of them, your test coverage is 120 / 200 = 60%.
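As a quick check of the arithmetic, here is a minimal Python sketch of the test-coverage formula (m tested functions out of n). The function name `compute_test_coverage` is our own and not part of any testing tool.

```python
def compute_test_coverage(tested_functions: int, total_functions: int) -> float:
    """Return test coverage (m / n) as a percentage.

    tested_functions is m, the number of functions with at least one test;
    total_functions is n, the number of functions in the application.
    """
    if total_functions <= 0:
        raise ValueError("total_functions must be positive")
    if not 0 <= tested_functions <= total_functions:
        raise ValueError("tested_functions must be between 0 and total_functions")
    return 100 * tested_functions / total_functions


# The article's example: 120 of 200 functions tested.
print(compute_test_coverage(120, 200))  # 60.0
```

Note that this number says nothing about *which* 120 functions were tested, which is exactly the gap risk coverage is meant to close.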

Risk coverage tells you what percentage of your business risk is covered by test cases.

Risk coverage accounts for the fact that some tests are substantially more important than others and therefore carry a higher risk weight.

With risk coverage, the focus shifts from the number of requirements tested to the risk weight of the requirements tested. You can usually achieve much higher risk coverage by testing 10 critical requirements than you can by testing 100 more trivial ones.

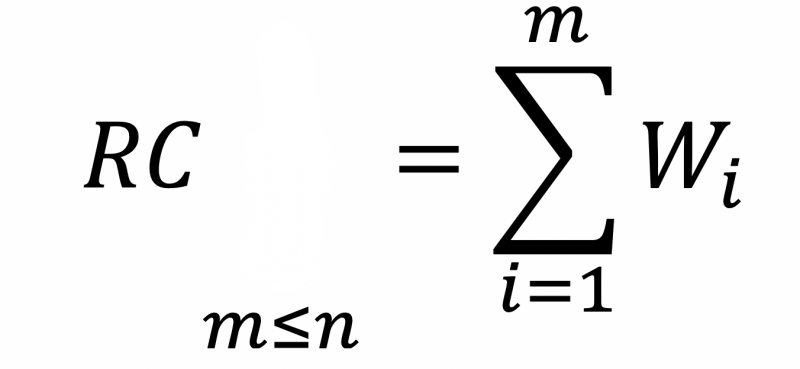

The risk weights of all n requirements always total 100%.

If you add up the risk weights of the m requirements that have been tested, the total is your risk coverage (RC).

For a simple example, assume that the risk weights of your core requirements are as follows:

- Capture Order: 80%

- Rectify Order and Cancel Order (combined): 20%

If you fully cover the Capture Order requirement, you’ll achieve 80% risk coverage. If you cover the other two requirements, Rectify Order and Cancel Order, you’ll achieve 20% risk coverage. In other words, by fully covering the Capture Order requirement, you get four times the amount of risk coverage with half as much work. This is a prime example of “Test smarter, not harder.”
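A minimal Python sketch of the risk-coverage sum for this example follows. The article gives only the combined 20% for Rectify Order and Cancel Order, so the 10/10 split in the code is a hypothetical assumption; it does not change either total printed below. Weights are kept as integer percentage points to avoid floating-point rounding, and the function name `risk_coverage` is our own.

```python
# Risk weights in percentage points. Capture Order = 80 comes from the article;
# the even 10/10 split of the remaining 20 is a hypothetical assumption.
RISK_WEIGHTS = {
    "Capture Order": 80,
    "Rectify Order": 10,  # assumed
    "Cancel Order": 10,   # assumed
}


def risk_coverage(weights: dict[str, int], tested: set[str]) -> int:
    """Return risk coverage RC: the sum of the weights of the tested requirements."""
    total = sum(weights.values())
    if total != 100:
        raise ValueError(f"risk weights must total 100%, got {total}%")
    unknown = tested - set(weights)
    if unknown:
        raise KeyError(f"unknown requirements: {sorted(unknown)}")
    return sum(weights[name] for name in tested)


print(risk_coverage(RISK_WEIGHTS, {"Capture Order"}))                  # 80
print(risk_coverage(RISK_WEIGHTS, {"Rectify Order", "Cancel Order"}))  # 20
```

The 100% check mirrors the rule that all risk weights must add up to 100%; without it, RC values from different weightings would not be comparable.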

By measuring risk coverage, you gain insight into:

- How rigorously your top business risks were tested

- Whether your top risks are meeting expectations (based on the correlated testing outcomes)

- The severity of your “blind spot”: the percentage of your business risk that is not tested at all

For example, consider the following results:

We don’t worry about the number of test cases here because we have much more powerful insight: We can tell that only 66% of our Core Bank business risk is tested and appears to be working as expected. Additionally, we know that 9% of our business risk is covered by tests that fail, 15% is covered by tests that aren’t running, and 10% has no tests at all. This means that the functionality behind at least 9%, and potentially as much as 34% (9% + 15% + 10%), of our business risk is not working in this release.
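This breakdown can be reproduced by summing risk weight per test status. The sketch below is illustrative only: the status labels and function names are hypothetical, and because the article reports only aggregate percentages for Core Bank, the example feeds in one entry per status rather than real individual requirements.

```python
from collections import defaultdict

# Hypothetical status labels; real test-management tools name these differently.
PASSED, FAILED, NOT_EXECUTED, NOT_TESTED = "passed", "failed", "not executed", "not tested"


def risk_breakdown(requirements):
    """Sum risk weight (percentage points) per test status.

    `requirements` is an iterable of (risk_weight, status) pairs, one per
    requirement, where status summarizes that requirement's test outcome.
    """
    totals = defaultdict(int)
    for weight, status in requirements:
        totals[status] += weight
    return dict(totals)


def at_risk_bounds(totals):
    """Return (minimum, maximum) share of business risk that may be broken.

    Failed risk is known to be broken; risk whose tests did not run, or that
    has no tests at all, might be broken too, so it counts only toward the maximum.
    """
    known_broken = totals.get(FAILED, 0)
    unknown = totals.get(NOT_EXECUTED, 0) + totals.get(NOT_TESTED, 0)
    return known_broken, known_broken + unknown


# The article's Core Bank figures, aggregated by status.
core_bank = [(66, PASSED), (9, FAILED), (15, NOT_EXECUTED), (10, NOT_TESTED)]
totals = risk_breakdown(core_bank)
print(totals)                  # {'passed': 66, 'failed': 9, 'not executed': 15, 'not tested': 10}
print(at_risk_bounds(totals))  # (9, 34)
```

With per-requirement data, the same two functions would apply unchanged; only the input list would grow.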

Would you rather know this information, or that there are 53,274 tests and almost 80% of them passed?

Now, let’s return to our earlier question: Are you confident promoting this release to production?

All Tests Are Not Created Equal

The reason traditional test results are such a poor predictor of release readiness boils down to the 80/20 rule, also known as the Pareto principle. Most commonly, this refers to the idea that 20% of the effort creates 80% of the value.

The software development equivalent is that 20% of your transactions represent 80% of your business value—and that tests for 20% of your requirements can cover 80% of your business risk.

Most teams already recognize that some functionality is more important to the business, and they aim to test it more thoroughly than functionality they perceive to be more trivial. Taking the alternative path—trying to test all functionality equally, regardless of its perceived risk—soon becomes a Sisyphean effort. With this route, you quickly approach the “critical limit”: the point where the time required to execute the tests exceeds the time available for test execution.

However, when teams try to intuitively cover the highest risks more thoroughly, they tend to achieve only 40% risk coverage and end up with a test suite that has a high degree of redundancy (aka “bloat”). On average, 67% of tests don’t contribute to risk coverage, yet they make the test suite slow to execute and difficult to maintain.
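One way to surface this kind of bloat, assuming each test is mapped to the set of requirements it exercises, is a greedy pass that keeps whichever test adds the most not-yet-covered risk weight and flags tests that add none. This is a common heuristic for weighted coverage problems, not necessarily the method the article has in mind, and it is not guaranteed to find the smallest possible suite. The test names and the 80/10/10 weights are hypothetical.

```python
def flag_redundant_tests(tests, weights):
    """Greedily keep tests that add new risk coverage; flag the rest.

    tests:   dict mapping test name -> set of requirement names it covers
    weights: dict mapping requirement name -> risk weight (percentage points)
    Returns (kept, redundant, risk_covered).
    """
    covered = set()
    kept = []
    remaining = dict(tests)

    def gain(reqs):
        # Risk weight this test would add on top of what is already covered.
        return sum(weights[r] for r in reqs - covered)

    while remaining:
        name = max(remaining, key=lambda t: gain(remaining[t]))
        if gain(remaining[name]) == 0:
            break  # every remaining test only re-covers risk that is already covered
        kept.append(name)
        covered |= remaining.pop(name)
    risk_covered = sum(weights[r] for r in covered)
    return kept, sorted(remaining), risk_covered


# Hypothetical weights and test-to-requirement mapping.
WEIGHTS = {"Capture Order": 80, "Rectify Order": 10, "Cancel Order": 10}
TESTS = {
    "test_capture_happy_path": {"Capture Order"},
    "test_capture_again": {"Capture Order"},
    "test_cancel": {"Cancel Order"},
    "test_capture_then_cancel": {"Capture Order", "Cancel Order"},
}
kept, redundant, rc = flag_redundant_tests(TESTS, WEIGHTS)
print(kept)       # ['test_capture_then_cancel']
print(redundant)  # ['test_cancel', 'test_capture_again', 'test_capture_happy_path']
print(rc)         # 90
```

Here one test delivers 90% risk coverage and three add nothing on top of it, while Rectify Order (10%) remains a blind spot. A flagged test adds no new risk coverage in this model, though it might still have other value, for example exercising different test data.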

With an accurate assessment of where your risks lie, you can cover those risks extremely efficiently. This is a huge untapped opportunity to make your testing more impactful. If you understand how risk is distributed across your application and you know which 20% of your transactions are correlated to that 80% of your business value, it’s entirely feasible to cover your top business risks without an exorbitant testing investment.
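If you know each requirement’s risk weight, finding the small set that carries most of the risk is a sort-and-accumulate exercise. The sketch below sorts requirements by weight and takes the shortest prefix that reaches a target (80% by default, echoing the 80/20 rule). Taking the largest weights first guarantees the fewest requirements for a given target. The requirement IDs R1 to R10 and their weights are invented for illustration.

```python
def top_risk_requirements(weights, target=80):
    """Return the fewest requirements whose risk weights reach `target` percent.

    Any k requirements sum to at most the k largest weights, so taking the
    heaviest requirements first reaches the target with the fewest picks.
    Returns (selected requirement names, cumulative risk weight).
    """
    selected, cumulative = [], 0
    for name, weight in sorted(weights.items(), key=lambda kv: kv[1], reverse=True):
        if cumulative >= target:
            break
        selected.append(name)
        cumulative += weight
    return selected, cumulative


# Hypothetical weights (percentage points) for ten requirements; they total 100.
REQUIREMENT_WEIGHTS = {
    "R1": 4, "R2": 50, "R3": 3, "R4": 2, "R5": 30,
    "R6": 3, "R7": 2, "R8": 2, "R9": 2, "R10": 2,
}
print(top_risk_requirements(REQUIREMENT_WEIGHTS))  # (['R2', 'R5'], 80)
```

In this invented distribution, 2 of 10 requirements (20%) carry 80% of the risk, so those two are where thorough testing pays off first.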

This translates to a test suite that’s faster to create and execute, as well as less work to maintain. Add in the appropriate automation and correlation, and you’ll reach the “holy grail”: fast feedback on whether a release has an acceptable level of business risk.

User Comments

I don't think that we need to replace "test coverage"-type test suites with "risk coverage"-based test suites. Both approaches (testing against requirements and testing against risks) are needed in good test suites.

"Test coverage" is an empty term unless you say what factor/item/... a test suite covers. Thus, "Risk coverage needs to replace test coverage" does not make sense. Different types of coverage are used: risk coverage is one of them, requirement coverage is another.