Containers support the timely delivery of a quality software application. However, the change to a DevOps process involving containers will require testers to adapt to this new, more agile environment. What does that mean for testers and the work they do? Here's how testers can embrace these changes, containers, and DevOps.

Google Trends shows that searches for “DevOps” have more than doubled over the past twelve months. Many leading software organizations, including Google and Facebook, have built their own tools to assist in scaling development and operations, collaboration, and automation to achieve almost continuous deployment of code. Some of them are giving their tools away as open source. Others are still figuring out DevOps and how it will impact the way they code, test, and deploy software.

For teams just starting with DevOps, the wealth of information can be both empowering … and a little intimidating. Among the topics that come up, one of the most popular is containers and their effect on building and deploying software.

Containers have been around in some form or another since the 1980s, but the open source tool Docker, combined with free and easy Linux tools (such as Jenkins, Chef, and Puppet) and cloud services, has recently made adopting containers quick, easy, and even cheap.

But What Are Containers, and How Do We Use Them?

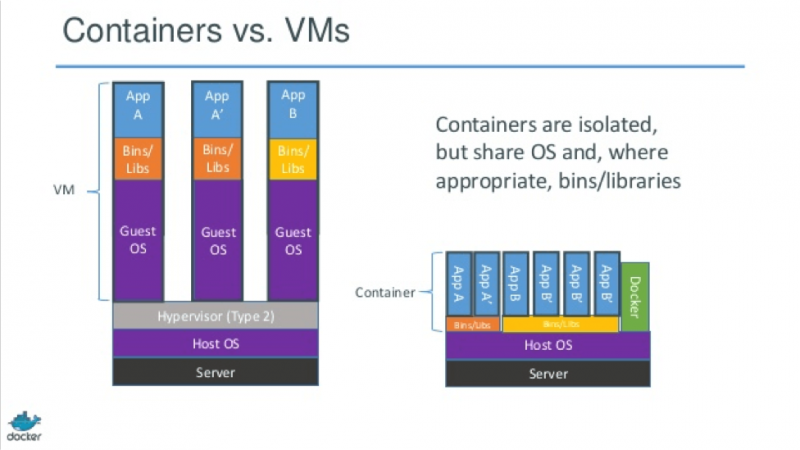

For our purposes, a container is a virtual machine that runs on the same operating system as your computer. Instead of copying a new operating system, Docker can reuse large portions of it and “hook into” the task-switching parts, as well as share dependencies across each application’s container:

What Does That Mean for Testing?

We used to have a tool like Jenkins create a build. The first thing we did as testers was pull that build down, install it, and use our modified servers as a “test” environment. With containers, we save the entire machine that will run in production, flip it on, and run it. This means the testing environment will be identical to the production environment, and you’ll even be able to run multiple builds on your machine at the same time.

Setting up a virtual machine now takes only a minute; there’s no more fighting to get the fix for sprint 21 up on the staging server while everyone else tests sprint 22. Have a problem in production? Put it in Docker, pull it down, and reproduce it locally. The number of production errors you find but are unable to reproduce in a test environment will go down dramatically.
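To make the "sprint 21 next to sprint 22" scenario concrete, here is a minimal Python sketch that assembles the `docker run` commands for starting two builds side by side on one machine. The image name `example/shop-app`, the tags, and the port numbers are hypothetical, and the sketch assumes the application listens on port 8080 inside its container.

```python
from typing import List


def docker_run_command(image: str, tag: str, host_port: int,
                       container_port: int = 8080) -> List[str]:
    """Build the argument list that starts one build in its own container."""
    # Derive a unique container name from the image and tag (illustrative only).
    name = f"{image.split('/')[-1]}-{tag}".replace(".", "-")
    return [
        "docker", "run",
        "--detach",                                     # run in the background
        "--name", name,                                 # one name per build
        "--publish", f"{host_port}:{container_port}",   # host port -> app port
        f"{image}:{tag}",
    ]


# Hypothetical builds: the sprint 21 fix and the current sprint 22 build,
# each mapped to its own port on the tester's machine.
builds = {"sprint-21-fix": 8081, "sprint-22": 8082}
commands = [docker_run_command("example/shop-app", tag, port)
            for tag, port in builds.items()]

for cmd in commands:
    print(" ".join(cmd))
```

On a machine with Docker installed, each list could be handed to `subprocess.run(cmd, check=True)` to start the container. Because each build gets its own name and host port, neither one blocks the other.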


How Testers Can Embrace the Change

Today, many manual testers are tasked with testing new builds with sanity or smoke tests to validate whether the core functionality of the application is retained with the new build. This is a critical process for companies building deployments manually so that they can validate that all the major artifacts are installed and configured properly.

In a continuous deployment process utilizing containers, the installation and configuration of dependencies is automated, removing much (if not all) of the need for those basic tests. Additionally, most DevOps pipelines will leverage automated build testing through continuous integration tools like Jenkins. Manual testers who don’t want to transition to providing more automated test coverage need to add value in some other way.

My suggestion for succeeding in a new, containerized world? Focus on becoming experts in the business use cases related to the software. New testing techniques focused around user experience and agility, such as exploratory testing, are also a popular way to increase the value of manual testing to DevOps organizations.

Too often, automated testers are building complex, UI-driven tests that take too long to complete and are brittle, breaking frequently. In a world of infrequent, manual deployments, this doesn’t slow things down much because things are going slowly in the first place, and the gaps between releases provide enough time to debug and fix flaky test results.

When transitioning to continuous deployment, builds are typically much more frequent and will require quick tests that can be run in parallel and are robust (not brittle). Given that containers can allow for easily creating multiple machines to run tests on, you’ll need to create tests that can run in parallel across these environments. Many tests can be transitioned from interacting with the UI to interfacing with APIs directly to allow for a less brittle test that completes in a fraction of the time. Finally, automation testers need to adopt a process that allows them to quickly identify script defects, quarantine those scripts, and fix them so that the build process is not hampered by issues within the test code.

Riding the Wave of Containers

Overall, containers should be embraced by teams looking to integrate and deploy more quickly and easily. However, manual and automated testers alike will be impacted by this shift, and they should respond accordingly to position themselves favorably ahead of the inevitable adoption of this new technology. Containers and the broader ecosystem of DevOps tooling provide a platform for improving agility and quality, but ultimately their success depends on knowledgeable and quality-focused professionals to implement them. So embrace containers (and DevOps) and ride the wave; it's as exciting a time as ever to be in software development and testing.

User Comments

Good thoughts. I definitely agree with the advice that (1) testers should focus more on the business cases, and (2) attention should be paid to the speed of execution of tests, often through running subsets of tests in parallel in multiple machines.

Some additional thoughts: the author mentions the use of tools like Chef and Puppet, but I have yet to see the value of those tools in a container-focused pipeline. They are simply superfluous, because with containers one no longer needs to remotely deploy apps/software - instead, one deploys container images.

Another thought: the use of containers radically enhances the ability of developers to perform end-to-end testing locally, before they check in their code changes. Thus, the value of behavior-driven development (BDD) increases, and the value of TDD decreases: a developer should run a fast behavioral suite (end-to-end), using containers for each system component, locally before pushing their code. If one measures code coverage on the behavioral suite, one of the major benefits of TDD is replaced by the behavioral test suite. Not saying TDD is not useful - it is just less useful, and in many cases the costs (maintaining lots of tests) will now outweigh the benefits - but that is for a team to decide. My point is that BDD is more feasible with containers, since you can now stand up a clean test environment in a fraction of a second.