Based on lessons learned over years of performance testing, Jun Zhuang came up with a list of suggestions that might help individuals or teams who are new to the craft. This article delves into why you should always start from the simple; be a meticulous and active observer; and be diligent and prepared.

It has been six years since I started working on performance testing. Applications I have worked on include an electronic health records application used by more than two hundred thousand physicians nationwide and a backend application that processes millions of lab orders, results, and other messages daily. High availability, high reliability, and high performance are critical for both applications due to their time-sensitive nature.

From knowing very little to becoming the lead engineer of a performance testing team of five, I have learned a lot about this art and have made many mistakes along the way. Based on lessons learned from those mistakes, I have come up with a list of suggestions that might help individuals or teams who are new to performance testing or are interested in it.

My suggestions include the following (not listed in any specific order):

- Always start from the simple

- Be a meticulous and active observer

- Be diligent and prepared

- Get involved and make friends with people around you

- Promote awareness of performance testing

- Be disciplined

- Manage your time

- Seek guidance from the experienced

- Know a little bit about everything and keep learning

I will elaborate on these suggestions and explain why each is important here and in two follow-up articles.

Always start from the simple

Sometimes, trying to determine the cause of a performance issue in a fairly simple system is challenging enough, let alone in a complex system where data flow through many interfaces before reaching their final destinations. Therefore, when testing a new system or when investigating performance problems, it is better to start with the individual components.

During a release a couple of years ago, my regression test for a web service showed a linear increase in response time, with database CPU usage staying at 100 percent throughout the test. We devoted all the resources we could spare and attempted many fixes, including backing out all the changes made for that release, rebuilding indexes of a few heavy-hit tables, revisiting some of the queries, and running numerous tests to rule out potential causes. But all efforts were in vain.

I happened to be registered for a software testing conference that year and presented the problem to attendees during a round table session. One of the suggestions given was to simplify the problem—more specifically, to bypass the business layer by recording testing scripts using the JDBC protocol directly against the database and run them one at a time.

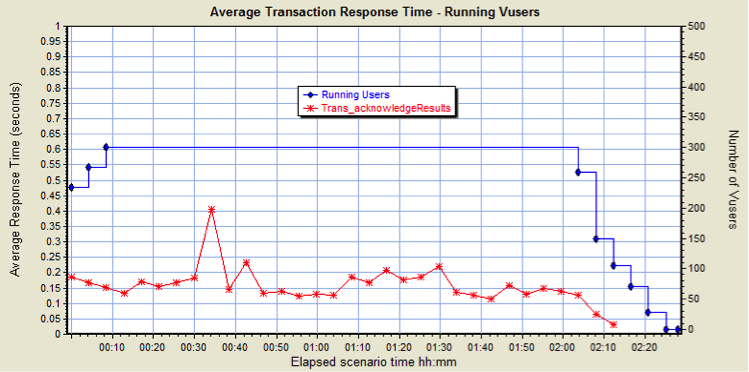

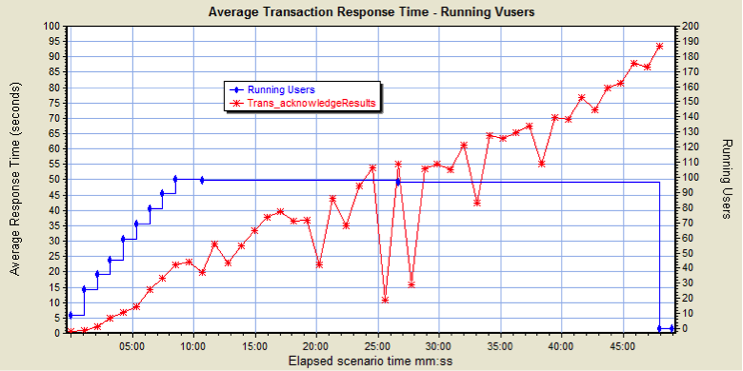

The problem turned out to be caused by one of the database tables the web service used to insert records. The response time deteriorated when that table was empty prior to starting a test; otherwise, the response time was normal with even a small number of records in that table. The following graphs show the comparison between normal and abnormal responses.

Normal response:

Abnormal response:

During the investigation we spent a lot of time on the code, which was logical and appropriate at that time, considering that we did not know exactly where the problem was. Inefficient code, connection pool usage, thread contention, and many other aspects of the application could have led to the degradation. But had we had a way to hit the database directly, we probably could have saved a lot of the guesswork and found the root cause much more quickly.
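As a rough illustration of hitting the database directly, the sketch below times single-row inserts against a table with no business layer in between, once starting from an empty table and once from a pre-populated one. It is only a sketch under stated assumptions: the investigation described above used JDBC scripts in a load-testing tool, whereas this uses Python's built-in `sqlite3` module with an in-memory database as a stand-in, and the table name `lab_orders` and the helper functions are hypothetical. It shows the shape of the comparison and is not expected to reproduce the original problem.

```python
import sqlite3
import time


def time_inserts(conn, n_rows):
    """Insert n_rows one at a time and return per-insert latencies in seconds."""
    latencies = []
    for i in range(n_rows):
        start = time.perf_counter()
        conn.execute("INSERT INTO lab_orders (payload) VALUES (?)", (f"order-{i}",))
        conn.commit()
        latencies.append(time.perf_counter() - start)
    return latencies


def run_case(seed_rows, n_rows=200):
    """Create a fresh table, optionally pre-populate it, then time inserts."""
    # In-memory SQLite is an assumption standing in for the real database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE lab_orders (id INTEGER PRIMARY KEY, payload TEXT)")
    conn.executemany(
        "INSERT INTO lab_orders (payload) VALUES (?)",
        [(f"seed-{i}",) for i in range(seed_rows)],
    )
    conn.commit()
    latencies = time_inserts(conn, n_rows)
    row_count = conn.execute("SELECT COUNT(*) FROM lab_orders").fetchone()[0]
    conn.close()
    return latencies, row_count


if __name__ == "__main__":
    for label, seed in (("empty table", 0), ("pre-populated table", 1000)):
        latencies, rows = run_case(seed)
        avg_ms = 1000 * sum(latencies) / len(latencies)
        print(f"{label}: {rows} rows after test, avg insert {avg_ms:.3f} ms")
```

Running each case separately, one variable at a time, is the point: if the two averages differ sharply against the real database, the business layer can be ruled out early.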

Be a meticulous and active observer

Many performance issues are not very obvious, so make sure you always look into subtle changes in measurements such as response time, throughput, and memory usage, and make sure you can explain them. Often it is a good idea to rerun the same test under the same conditions and see if the trend persists.
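One way to make subtle changes harder to overlook is to compare a rerun against a baseline numerically instead of by eye. The following is a minimal sketch, assuming response times are available as plain lists of milliseconds; the function names and the 5 percent tolerance are illustrative choices, not values taken from any particular tool.

```python
import math
import statistics


def summarize(samples_ms):
    """Return the median and nearest-rank 95th percentile of response times."""
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return {"median": statistics.median(ordered), "p95": ordered[rank - 1]}


def compare_runs(baseline_ms, rerun_ms, tolerance=0.05):
    """Flag each metric whose relative change from the baseline exceeds tolerance."""
    base = summarize(baseline_ms)
    new = summarize(rerun_ms)
    report = {}
    for metric in base:
        change = (new[metric] - base[metric]) / base[metric]
        report[metric] = {"change": change, "flagged": abs(change) > tolerance}
    return report


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    baseline = [100, 102, 98, 101, 99, 103, 97, 100, 105, 110]
    rerun = [104, 108, 103, 107, 105, 109, 102, 106, 112, 131]
    for metric, info in compare_runs(baseline, rerun).items():
        print(f"{metric}: {info['change']:+.1%} flagged={info['flagged']}")
```

A flagged metric is a prompt to rerun and investigate, not a verdict; the tolerance should be wider than the normal run-to-run variation of the system under test.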

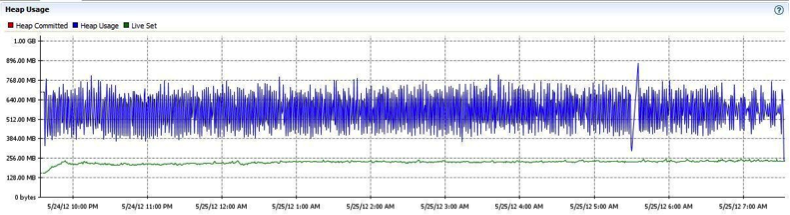

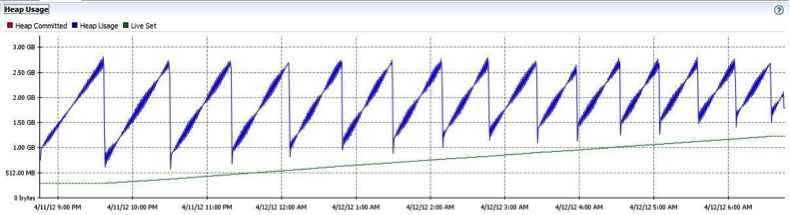

Take slow memory leak as an example. Suppose you ran a short test for a Java application and monitored the JVM usage of the application server. The live set and HEAP usage would show a slight but continuous increase over time. However, because the leak is slow, it probably will not jump out screaming there is a problem.

The following graphs show the HEAP usage from two ten-hour tests. The first one did not have a memory leak; the second one did. Problems such as this can be easily missed if you are not careful.

No memory leak:

Memory leak:
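A slow upward trend like this can also be checked numerically by fitting a straight line to heap usage over time. The sketch below is one possible approach, assuming you have exported samples as (elapsed hours, heap used in MB) pairs, ideally taken right after full garbage collections so that they approximate the live set. The function names and the 1 MB per hour threshold are hypothetical and would need tuning for a real application.

```python
def leak_slope(samples):
    """Least-squares slope of heap usage (MB) against elapsed time (hours)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_h = sum(h for _, h in samples) / n
    numerator = sum((t - mean_t) * (h - mean_h) for t, h in samples)
    denominator = sum((t - mean_t) ** 2 for t, _ in samples)
    if denominator == 0:
        raise ValueError("samples must span more than one point in time")
    return numerator / denominator


def looks_like_leak(samples, threshold_mb_per_hour=1.0):
    """Return True when heap usage grows faster than the chosen threshold."""
    return leak_slope(samples) > threshold_mb_per_hour


if __name__ == "__main__":
    # Made-up hourly samples from two ten-hour tests, for illustration only.
    stable = [(h, 500 + (h % 2)) for h in range(11)]
    leaking = [(h, 500 + 4 * h + (h % 2)) for h in range(11)]
    print("stable test leak suspected:", looks_like_leak(stable))
    print("leaking test leak suspected:", looks_like_leak(leaking))
```

A positive slope in a short test is only a hint; a longer run under the same conditions is what confirms whether the trend persists.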

For the database issue I mentioned previously, we pretty much stumbled on the discovery, but we would have struggled longer had my manager at the time not been such a meticulous observer. He did not have much knowledge about the load-testing tool, but he offered to run tests and analyze results while I was away at the conference. It was he who noticed the subtle database difference between good and bad tests.

Observation is the first step toward learning. Make sure you don’t stop at observing, but learn from what you observe. As you gain experience, you will gradually establish your own filter system to let you focus on areas that may have real performance concerns. This will help avoid raising too many false alarms.

While passive observers may simply accept everything they are told, active observers will always try to go beyond the surface until they run out of questions. This is one of the many attributes a good tester must possess.

Be diligent and prepared

When it comes to the question of whether to test something or how to test it, we need to be confident enough to draw our own conclusions based on our understanding of the system instead of relying on others telling us what we should do. We must do our due diligence and invest time learning every aspect of the system.

We also want to be diligent in saving testing artifacts for later reference. If you have worked in an agile environment, you have probably heard the argument that no documentation is needed, but I strongly disagree. Say you have run test after test trying to pinpoint a performance issue but have not kept records, and then, a few days later, someone wants to know the performance characteristics and system configuration of a specific test. Good luck with your memory!

In terms of documentation, there are a few items that I consider must-haves:

- A detailed test design document listing why you need to run certain tests and how you are going to run them, test conditions of the system, test data, etc.

- Details of each test execution, including the date and time of the execution, test results, and system configuration details if the test can’t be easily tied back to the design document

- Decision-making processes that impact the design or execution of tests

- Work instructions for recurrent and time-consuming activities such as data preparation or setting up the testing system
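The execution details in the list above lend themselves to a small structured record written at the end of every run. The following is a minimal sketch of one way to do that, assuming a JSON-lines log file; the `ExecutionRecord` fields, the helper names, and the sample values are all hypothetical and should be adapted to whatever your design document tracks.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class ExecutionRecord:
    """One test execution: when it ran, against what, and what it showed."""
    test_name: str
    design_doc_ref: str   # section of the test design document this run belongs to
    started_at: str       # ISO 8601 timestamp in UTC
    system_config: dict   # configuration details not covered by the design document
    results: dict         # headline measurements for the run
    notes: str = ""       # decisions or oddities worth remembering


def new_record(test_name, design_doc_ref, system_config, results, notes=""):
    """Build a record stamped with the current UTC time."""
    started_at = datetime.now(timezone.utc).isoformat(timespec="seconds")
    return ExecutionRecord(test_name, design_doc_ref, started_at,
                           system_config, results, notes)


def to_json_line(record):
    """Serialize a record as a single JSON line with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)


def append_record(path, record):
    """Append the record to a JSON-lines log file."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(to_json_line(record) + "\n")


if __name__ == "__main__":
    record = new_record(
        test_name="web-service-regression",
        design_doc_ref="design-doc section 3.2",
        system_config={"app_servers": 2, "db_table_seeded": False},
        results={"avg_response_ms": 420, "db_cpu_percent": 100},
        notes="Target table was empty before the run.",
    )
    print(to_json_line(record))
```

An append-only log like this makes it cheap to answer, days later, which configuration produced which result.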

Before presenting test results to other audiences, especially nontechnical ones, make sure you completely understand what you are about to show and you can explain any odd behaviors. If you are not sure, talk to a developer beforehand. Failing to prepare can lead to awkward situations. Some common questions people ask when reviewing test results are:

- How does the testing load compare to production?

- Can the system handle the projected load?

- What can be done to improve the system’s performance?

- What caused that spike in CPU/memory/response time?

Performance testing is more about planning and preparation than actual execution. The only way to design relevant and effective tests is by understanding how components of a system interact with each other and how end-users interact with the system. I understand it’s easier said than done, but there is no shortcut or workaround.

As Scott Barber once said when comparing performance testing and functional testing, “Not only are the skills, purpose, planning, scheduling, and tools different, but the entire thought process is different.”

User Comments

Great job, nice demo of a memory leak

Jun - I'm working on a research project, as part of my master's degree, to understand the alignment of performance testing tools to the market. Also looking at trends for toolsets.

We are in need of some subject matter expertise in this area and are reaching out to conduct 30 minute phone interviews. Do you know of anyone who could assist us in this?

Cheers

Hi Jeffrey,

Thanks for contacting me about your research project. I'd like to help but am not sure how. I use whatever tools are suitable for my work and do not focus on any specific one.

Jun